This article originally appeared on HCDExchange.

Human-centred design (HCD) is increasingly being used as a complementary approach to traditional global health programming methods to design and optimise innovative solutions. HCD has a unique ability to bring new ideas to entrenched problems, integrate multiple stakeholder perspectives, and bring in a strong human lens. Differences between conventional evaluation approaches and the inherent fluidity required for HCD and adaptive implementation invariably make it challenging for practitioners to decide how best to evaluate HCD or adaptive programs.

To address this knowledge gap, our webinar examined some of the roadblocks in evaluating health projects rooted in iterative approaches with experts from PSI’s Adolescents 360 (A360), YLabs’ CyberRwanda and Vihara Innovation Network.

Program interventions such as PSI’s A360 and YLabs’ CyberRwanda use HCD and adaptive implementation approaches to ensure that they are designing and implementing the right solutions.

Dive into webinar highlights and engaging discussion questions below!

The HCD process paves a path toward implementation and evaluation by ensuring that the ultimate intervention is desirable among many key stakeholders and is feasible for implementation and scale-up. Under the adaptive implementation approach, the findings from routine user testing inform iterations to the program.

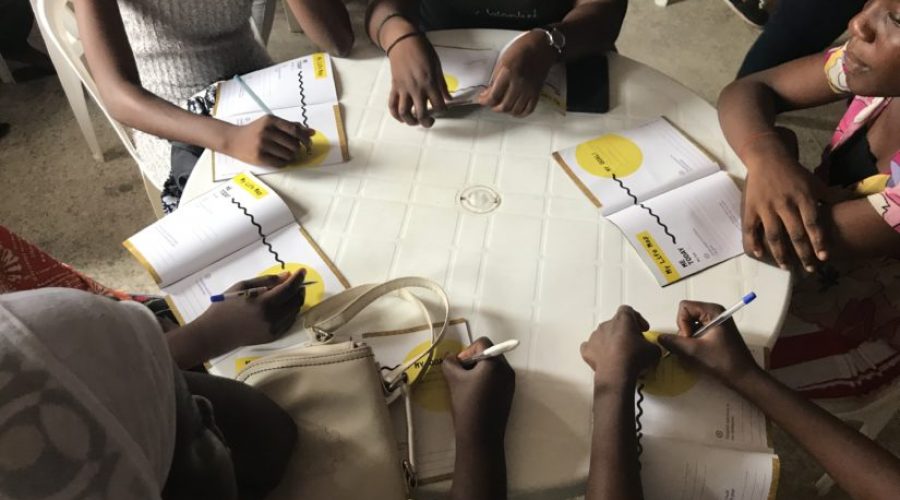

Applying HCD during the implementation and evaluation stages of the intervention lends itself well to adaptive learning and iteration, allowing new information to be continuously introduced. This is because HCD is inherently collaborative. For instance, CyberRwanda has been able to use interviews, focus group discussions, and co-design sessions to better understand the quantitative data.

For A360, HCD and adaptive implementation approaches are complementary.

“We’ve found the two approaches very complementary. They rely on a culture of curiosity and ‘human-centeredness,’ that harnesses diverse perspectives to elicit and interpret insights and evidence, employ a collaborative and iterative approach to generating solutions, and entrust those closest to the point of impact to lead,” Matthew Wilson, Project Director, A360

Chiefly, HCD may help to support the long-term sustainability and cost-efficiency of the intervention by addressing implementation challenges early and often, rather than relying solely on endline results.

- The design of the intervention and the implementation strategy are not known at the outset, including who the intervention is directed at, how and where it will be implemented, and with what intensity.

- Practitioners cannot assume consistency in the intervention design and implementation strategy as it evolves over the life of the project.

- Documentation of the iterative design process, decision making, and testing along the way is either absent or incompatible with what is considered an acceptable measurement metric.

- Limited financial resources and an almost linear sequencing of each discipline’s work.

- A tenuous relationship between different disciplinary teams, each silently questioning the validity of the other.

- Differing learning priorities and styles across disciplines, and thus a limited common language.

- Historically, designers have had limited understanding of measurement priorities and have not incorporated them into the design process.

“The dream would be to see these disciplines kind of bring their individual strengths to bear but for the boundaries to blur, small cross-disciplinary, hybrid design and measurement teams working on a day to day basis together, optimising implementation strategies for outcomes.” Divya Datta, Director of Design Strategy and Innovation, Vihara Innovation Network

- Keeping the ‘essence’ of the intervention in mind: Through finding the balance between adaptation and staying true to the ‘essence’ of the intervention, drifting can be avoided, despite constant iteration. This requires a shift in the mindset of implementers, becoming systematic, and an investment in capabilities.

- Evaluation designs need to be responsive and adaptive too: This has implications for implementers, evaluators and donors. Implementers and evaluators would benefit from high-touch engagement and open communication, so that both have an intimate and timely understanding of the intervention design and implementation strategy.

- Implementers also need to have a robust understanding of evaluation protocols: This will enable them to understand the implications of potential or actual changes in the intervention design or implementation strategy.

- Implementers need to manage adaptation effectively: This will enable them to maintain fidelity to the ‘essence’ of their interventions and avoid drift.

- Think beyond outcome evaluations: Outcome evaluations essentially tell two parts of the story – the baseline and the endline. But we also need process/developmental evaluations that help tell the story of what’s happened in between.

- Evaluators need to be resourced to reconcile results emerging from different evaluation approaches: This sense-making should place more emphasis on evaluating how well a programme learns and adapts.

- Enhancing the ability of designers and design teams to understand and factor in indicators of program success. This can be done, for example, by involving design teams in program-shaping discussions that are grounded in the Theory of Change (ToC) and exposing them to the articulation of a ToC and the definition of intermediate program outcomes, milestones and metrics.

- Promoting collaboration between design and research teams to find the right balance. This collaboration will help project teams to identify and align on key research objectives, using both quantitative and qualitative data, to measure the impact of the project and identify the current challenges.

- Use of rapid and utilitarian measurement approaches in the design phases to inform creative hypotheses and prototype development and to link design efforts to indicators. For example, teams can experiment with blending learning techniques and tools, drawing on HCD research and on monitoring, evaluation and learning strategies, to allow for better measurement in the design phases. This helps combine the rigour of measurement approaches with the rapidity of design learning cycles to build new learning models.

- Moving toward agile, adaptive and utility-focused evaluations. One can only measure what one designs for. As such, grant structures and resources are needed to enable designers to work with program teams in shaping monitoring and evaluation. This will place more emphasis on evaluating how well a programme learns and adapts. Measuring outcomes is obviously important, but wouldn’t it be powerful if the ability to learn and adapt was a measure of success explicitly valued by donors and implementers?

- Better documentation capabilities: Recording every change and adaptation is important so that it can be accounted for during the evaluation or data analysis process.

- Being adaptive in terms of implementation is not enough; practitioners also have to embrace flexibility in their processes. Consequently, practitioners must ensure that the metrics used account for how adaptable a program is.

- Watch the webinar here

- Implementing adaptive youth-centered adolescent sexual reproductive health programming: learning from the Adolescents 360 project in Tanzania, Ethiopia, and Nigeria (2016-2020)

- Challenges and opportunities in evaluating programmes incorporating human-centred design: lessons learnt from the evaluation of Adolescents 360

- Monitoring and evaluation: five reality checks for adaptive management

- How to Monitor and Evaluate an Adaptive Programme: 7 Takeaways

- Randomised Controlled Trial (RCT) – Impact Study (2018-2021)

Speakers

- Anne Lafond, Director, Centre for Health Information, Monitoring & Evaluation (CHIME) & Senior Advisor, HCDExchange

- Matthew Wilson, Project Director, Adolescents 360

- Fifi Ogbondeminu, Deputy Project Director, Adolescents 360

- Hanieh Khosroshah, Senior Product Designer, YLabs

- Laetitia Kayitesi, Research Manager, YLabs

- Divya Datta, Director of Design Strategy and Innovation, Vihara Innovation Network

About Adolescents 360 (A360): Adolescents 360 is a girl-centred approach to contraceptive programming with a goal to increase girls’ voluntary uptake of modern contraceptives, while supporting them to choose the lives they want to live.

About CyberRwanda: CyberRwanda is a direct-to-consumer digital platform developed by YLabs and implemented by Society for Family Health, Rwanda (SFH) that provides information on health and employment and links youth to local pharmacies to improve access to contraception and other health products.

About Vihara Innovation Network: Vihara is an India-based learning, innovation and implementation agency that has worked across 21 countries in the global south on health and wellbeing programs using techniques of behavioural science, human-centred design and prototyping.